Nvidia Plans Massive $500B AI Server Expansion in the U.S.

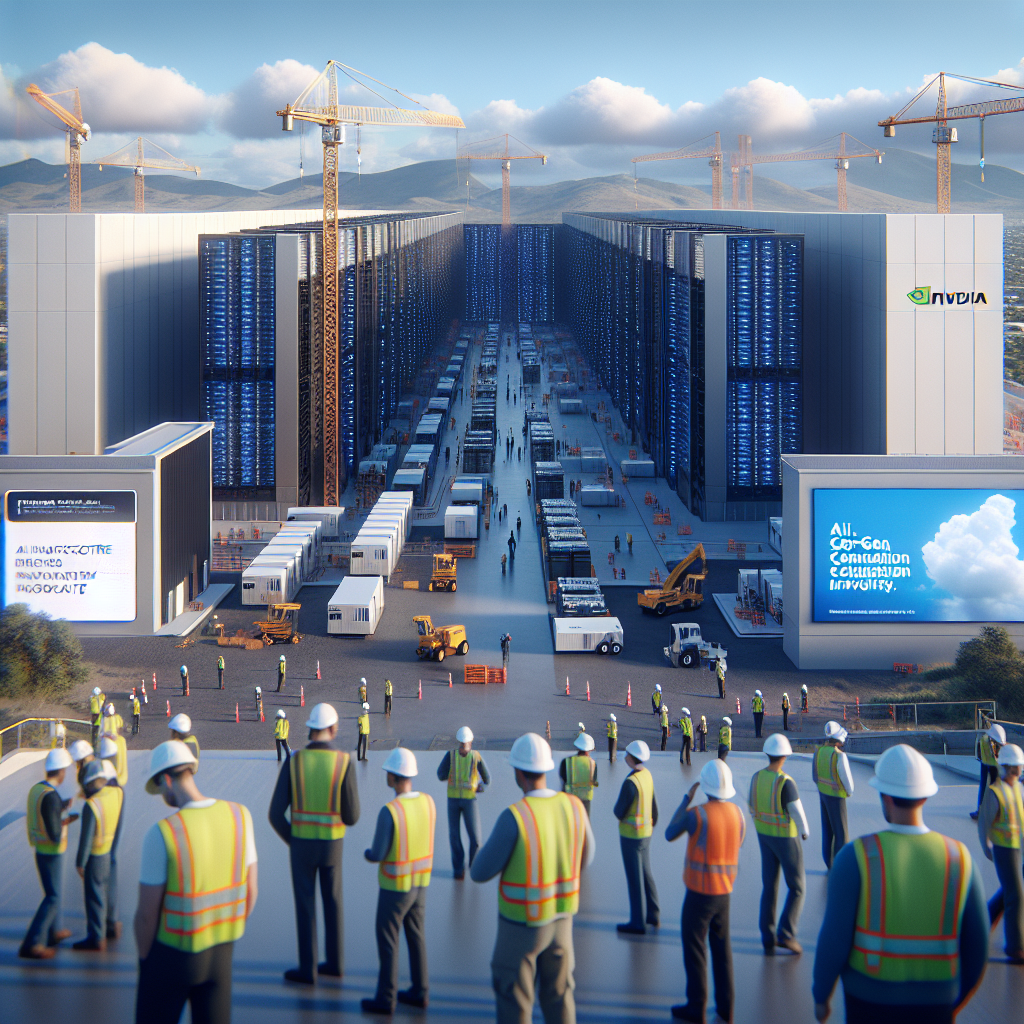

In a bold move that signals the rapid growth of artificial intelligence infrastructure, Nvidia has announced plans to build AI servers worth up to $500 billion in the United States over the next few years. This massive investment highlights the company’s dominant position in AI computing and its commitment to expanding American technological capabilities.

Nvidia’s Ambitious Expansion Strategy

Nvidia announced the plan in April 2025, with CEO Jensen Huang presenting it as the first time the company’s AI supercomputers would be built entirely in the United States. The tech giant aims to produce these servers to meet soaring demand for AI computing resources. If carried out in full, the expansion would rank among the largest infrastructure investments in technology history and could reshape the AI landscape for years to come.

To carry out the plan, Nvidia is working with manufacturing partners, including TSMC, Foxconn, and Wistron, to produce its chips and assemble complete AI supercomputers at U.S. sites in Arizona and Texas. This collaborative approach should help accelerate the timeline while spreading the investment costs.

According to industry analysts, this expansion could create thousands of high-skilled jobs in manufacturing, engineering, and data center operations. Furthermore, it will strengthen America’s position in the global AI race against competitors like China.

The Driving Forces Behind the AI Server Boom

Several key factors are driving Nvidia’s massive investment in AI server infrastructure:

Explosive Growth in AI Applications

The past year has seen unprecedented adoption of AI technologies across industries. From generative AI tools like ChatGPT to sophisticated business applications and scientific research, the demand for AI computing power continues to surge. Gartner, for example, has forecast sustained double-digit annual growth in worldwide AI software revenue.

Large language models (LLMs) and other AI systems require enormous computational resources for both training and inference. For instance, training a single large AI model can cost tens of millions of dollars in computing resources alone. Nvidia’s expansion aims to meet this exploding demand while making AI computing more accessible and affordable.
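As a rough illustration of how compute bills reach that scale, a training run’s cost can be approximated as GPU count × run length in hours × hourly price per GPU. The sketch below is a back-of-the-envelope estimate under hypothetical assumptions: the 8,000-GPU cluster, 60-day run, and $2.50 hourly rate are illustrative values, not figures from Nvidia or any cloud provider.

```python
def training_cost_usd(num_gpus: int, days: float, usd_per_gpu_hour: float) -> float:
    """Rough compute cost of a training run: GPUs x hours x hourly price.

    Ignores storage, networking, staff, and failed runs, so it understates
    the full cost. All inputs are hypothetical.
    """
    gpu_hours = num_gpus * days * 24  # total GPU-hours consumed by the run
    return gpu_hours * usd_per_gpu_hour


# Hypothetical run: 8,000 GPUs for 60 days at an assumed $2.50 per GPU-hour.
cost = training_cost_usd(num_gpus=8_000, days=60, usd_per_gpu_hour=2.50)
print(f"${cost:,.0f}")  # → $28,800,000
```

Because the estimate is a simple product, doubling either the cluster size or the run length doubles the bill. It also leaves out storage, networking, staff, and failed experiments, so real totals would be higher.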

Chip Shortage and Supply Chain Concerns

The global semiconductor shortage has highlighted vulnerabilities in the tech supply chain. By investing heavily in U.S.-based infrastructure, Nvidia is working to ensure reliable access to the computing resources needed for AI development. This domestic focus also aligns with growing government concerns about technology sovereignty and national security.

The expansion comes amid ongoing efforts by the U.S. government to boost domestic chip production through initiatives like the CHIPS Act. Nvidia’s investment complements these efforts by focusing on the downstream infrastructure that will utilize these chips.

Competition and Market Position

Nvidia currently dominates the AI chip market with its graphics processing units (GPUs) and specialized AI accelerators. However, competitors like AMD, Intel, and various startups are working hard to catch up. This massive investment helps Nvidia cement its leadership position while creating deeper relationships with key customers.

By taking a direct role in producing complete AI servers on U.S. soil, Nvidia also expands beyond its traditional role as a chip supplier. The company increasingly positions itself as a comprehensive AI solutions provider with both hardware and software offerings.

Technical Details of the Expansion

The planned server infrastructure will feature Nvidia’s latest technologies and innovations:

Next-Generation GPU Architecture

At the heart of these new servers will be Nvidia’s advanced GPU chips. The company recently launched its Blackwell architecture, which Nvidia says delivers up to 4x faster AI training than the previous Hopper generation along with substantially better performance per watt, making these chips well suited to large-scale AI deployments.

Blackwell GPUs can process enormous amounts of data in parallel, a capability essential for training and running sophisticated AI models. Each server will contain multiple GPUs linked through Nvidia’s NVLink interconnect technology so that they operate as a single, unified computing resource.

Software and AI Platforms

Beyond hardware, Nvidia will deploy its comprehensive software stack including CUDA, TensorRT, and other AI development tools. These software platforms allow developers to efficiently use the underlying hardware and optimize their AI applications for maximum performance.

The expansion will also incorporate Nvidia’s AI Enterprise software suite, which helps businesses deploy and manage AI workloads at scale. This integration of hardware and software creates a complete ecosystem for AI development and deployment.

Infrastructure and Energy Considerations

Building $500 billion worth of servers requires massive physical infrastructure. The planned facilities will need:

- Advanced cooling systems to manage heat from thousands of high-performance chips

- Redundant power systems to ensure reliability

- High-speed networking to connect computing resources

- Physical security measures to protect valuable assets

Energy efficiency remains a critical concern for such large computing facilities, which is why Nvidia has focused on improving the performance-per-watt metrics of its new chips. Data center operators are also increasingly turning to renewable energy sources to reduce their environmental impact.
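Performance per watt is throughput divided by power draw, so a chip can consume more power than its predecessor and still be more efficient, provided its throughput rises faster. The sketch below works through that arithmetic with hypothetical numbers (a 4x throughput gain at 1.4x the power draw); these are illustrative assumptions, not published specifications for Blackwell or any other Nvidia chip.

```python
def perf_per_watt(throughput: float, watts: float) -> float:
    """Throughput (any consistent unit, e.g. tokens per second) per watt of power draw."""
    return throughput / watts


def efficiency_gain(old_tp: float, old_w: float, new_tp: float, new_w: float) -> float:
    """How many times more work per watt the new chip does versus the old one."""
    return perf_per_watt(new_tp, new_w) / perf_per_watt(old_tp, old_w)


# Hypothetical chips, given as (throughput units per second, watts); assumed values only.
old_chip = (1_000.0, 700.0)
new_chip = (4_000.0, 980.0)  # 4x the throughput at 1.4x the power draw

gain = efficiency_gain(*old_chip, *new_chip)
print(f"{gain:.2f}x more work per watt")  # → 2.86x more work per watt
```

In this hypothetical case, the new chip draws 40% more power yet does roughly 2.9 times as much work for each watt, which is the kind of trade-off that matters when thousands of chips share one facility’s power budget.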

Economic and Strategic Impact

The economic implications of Nvidia’s expansion extend far beyond the company itself:

Job Creation and Economic Growth

This massive investment will create jobs across multiple sectors. Construction workers will build the physical facilities. Engineers will design and maintain the systems. Technicians will manage day-to-day operations. Additionally, the increased AI computing capacity will enable new startups and business opportunities that further boost economic activity.

Local communities hosting these facilities will benefit from tax revenue and increased economic activity. The expansion will likely target areas with favorable energy costs, available land, and supportive regulatory environments.

National Security Implications

AI computing power increasingly represents a strategic national asset. Countries with advanced AI capabilities gain advantages in economic competitiveness, scientific research, and defense applications. By building this infrastructure within the United States, Nvidia helps strengthen America’s technological leadership position.

Successive U.S. administrations have emphasized the importance of maintaining leadership in critical technologies like AI. This private-sector investment aligns with that national priority and complements public initiatives like the National AI Research Resource.

Industry Transformation

Nvidia’s expansion will accelerate the transformation of multiple industries through AI:

- Healthcare: Advanced AI models for drug discovery, medical imaging, and personalized medicine

- Financial services: Sophisticated risk models, fraud detection, and algorithmic trading

- Manufacturing: AI-powered automation, quality control, and supply chain optimization

- Transportation: Self-driving vehicle development and smart logistics

- Energy: Grid optimization and climate modeling

Each of these applications requires massive computing resources that will become more accessible through this expansion. The resulting innovations could generate trillions of dollars in economic value while addressing critical societal challenges.

Challenges and Considerations

Despite its tremendous potential, Nvidia’s expansion plan faces several significant challenges:

Regulatory and Environmental Concerns

Large data centers face increasing scrutiny regarding their environmental impact, particularly water usage for cooling and electricity consumption. Nvidia will need to address these concerns through efficient designs and sustainable practices to gain community support and regulatory approvals.

Additionally, the company may face antitrust considerations as its dominance in AI infrastructure grows. Regulators are increasingly concerned about concentration of power in the tech industry, which could lead to additional oversight or restrictions.

Execution Risks

Building infrastructure at this scale involves complex project management and coordination. Any delays in construction, chip production, or software development could impact the timeline. The company must also navigate global supply chain challenges that have affected the entire tech industry in recent years.

Maintaining consistent quality across multiple facilities while scaling rapidly presents another challenge. Nvidia will need robust quality control processes and standardized designs to ensure reliability.

Market and Demand Uncertainties

While AI demand currently appears insatiable, technology markets can change rapidly. Nvidia is making a significant bet that AI computing needs will continue growing at current rates. Any slowdown in AI adoption or breakthroughs in alternative computing approaches could affect the return on this massive investment.

Furthermore, this expansion occurs amid intense competition, with cloud providers building their own custom chips and startups developing new AI accelerators. Nvidia must continue innovating to maintain its technological edge throughout this multi-year buildout.

Looking to the Future

Nvidia’s $500 billion expansion represents more than a business investment; it signals a fundamental shift in computing infrastructure. As AI becomes increasingly central to business operations, scientific research, and everyday life, the demand for specialized computing resources will continue growing.

This expansion will likely accelerate AI adoption across industries by making powerful computing resources more accessible. Organizations that previously couldn’t afford AI development may find new opportunities as infrastructure costs decrease and capabilities increase.

For Nvidia, the initiative pushes its business model further from primarily selling chips toward providing comprehensive AI infrastructure. This evolution mirrors similar shifts in cloud computing, where hardware providers increasingly offer full-stack solutions.

Conclusion

Nvidia’s planned $500 billion investment in U.S.-based AI servers represents a watershed moment for artificial intelligence infrastructure. The scale of this expansion underscores both the enormous current demand for AI computing resources and expectations of continued explosive growth.

As these servers come online over the next few years, they will enable new AI applications across industries while strengthening America’s position in a critical technology sector. The economic impacts will extend from direct job creation to entirely new business models and innovations powered by AI.

While challenges remain in executing such an ambitious plan, Nvidia’s track record of technological leadership and strong market position provide a solid foundation. The company’s vision of ubiquitous AI computing power takes a major step forward with this historic investment.

What are your thoughts on Nvidia’s massive expansion plans? How might increased AI computing capacity affect your industry or organization? Share your perspectives in the comments below!

References

- The Globe and Mail – Nvidia to build AI servers worth up to US$500-billion in U.S. over next few years

- Gartner – Worldwide Artificial Intelligence Software Market Forecast

- Nvidia – Data Center Solutions

- The White House – Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence

- McKinsey – The State of AI in 2023