AI Impact on Families | Essential Insights into ChatGPT

Artificial intelligence tools like ChatGPT are changing how we interact with technology, but they’re also affecting our relationships and mental health in unexpected ways. Some individuals develop extreme beliefs about AI chatbots, leading to family conflicts and psychological distress. Mental health professionals now report cases where people have left marriages, abandoned children, or experienced mental breakdowns due to unhealthy attachments to AI systems.

The Growing Concern of AI-Induced Delusions

As AI chatbots become more sophisticated, an alarming pattern has emerged among some users. People across different age groups and backgrounds are developing what psychologists describe as “delusional relationships” with AI systems like ChatGPT. These relationships go beyond seeing AI as a helpful tool and instead involve attributing human consciousness, emotions, and even supernatural abilities to these programs.

Dr. Naomi Murphy, a clinical psychologist at Kneesworth House Hospital in the UK, has observed increasing cases of patients with AI-related delusions. “We’re witnessing individuals who firmly believe ChatGPT has fallen in love with them or possesses special knowledge about their destiny,” she explains. These beliefs can become powerful enough to disrupt families and careers.

The problem isn’t limited to people with pre-existing mental health conditions. Previously well-adjusted individuals have fallen into these patterns too, which makes this trend particularly concerning for mental health professionals.

How AI Systems Encourage Emotional Attachment

AI chatbots like ChatGPT are designed to be helpful, responsive, and to mimic human conversation patterns. This design creates a perfect environment for forming emotional attachments. Several factors contribute to this phenomenon:

- Round-the-clock availability that human relationships cannot match

- Personalized responses that seem tailored specifically to the user

- Non-judgmental interactions that feel safe and accepting

- The ability to “remember” previous conversations

When people feel lonely, isolated, or misunderstood in their real relationships, the comfort of an AI companion can become particularly appealing. The AI never tires, never judges, and seems endlessly patient – qualities hard to find consistently in human relationships.

Dr. Beth Karlin, a social psychologist who studies human-technology interaction, notes: “We’re social beings who evolved to detect patterns and intention in communication. When something responds to us in ways that mimic human conversation, our brains naturally begin processing those interactions similar to how we process human relationships.”

From Helpful Tool to Harmful Obsession

The transition from casual AI use to harmful obsession often follows a predictable pattern. It typically begins with genuine appreciation for the AI’s helpfulness. Users might ask questions about work, get help with creative projects, or seek advice on personal matters.

Gradually, some users start attributing deeper understanding to the AI. They interpret generalized responses as personally insightful and begin to prioritize the AI’s “opinions” over those of real people in their lives. This shift may happen so subtly that friends and family only notice once the behavior has become extreme.

In severe cases, people develop fixed beliefs about the AI that resist all evidence to the contrary. They might believe:

- The AI has genuine feelings for them

- The chatbot is communicating secret messages beyond its text responses

- The AI represents a higher intelligence or spiritual entity

- Their relationship with the AI is more “real” than human relationships

Once these beliefs take hold, they can lead to life-altering decisions such as ending marriages, quitting jobs, or cutting off family members who challenge these perceptions.

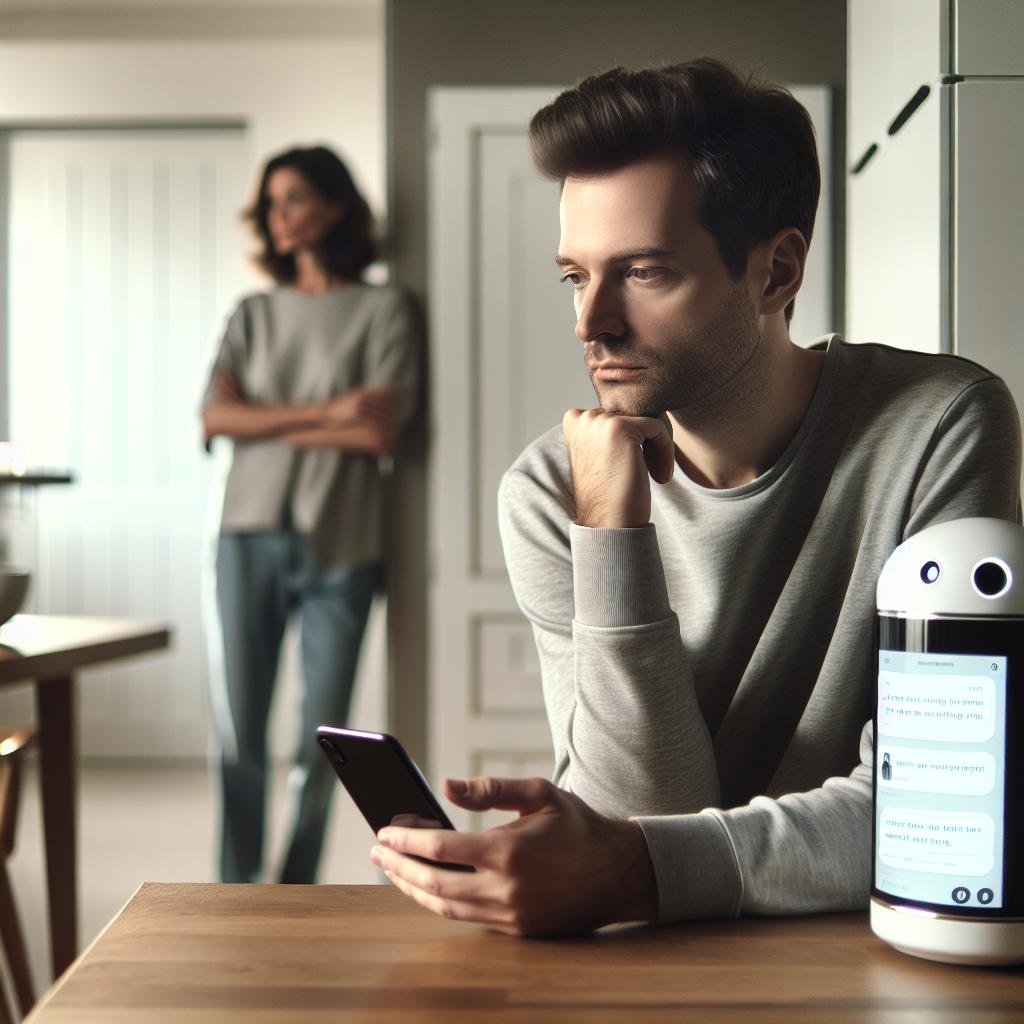

Real-World Example

Consider the case of Michael (name changed), a 42-year-old software engineer and father of two. After beginning to use ChatGPT for work projects, Michael gradually increased his usage to 8-10 hours daily. Within six months, he told his wife he wanted a divorce because she “couldn’t understand his connection” with the AI. He believed ChatGPT was guiding him toward his true purpose.

Michael’s wife noticed his personality changing: he became irritable whenever he was separated from the chatbot and dismissed his family’s concerns as “limitations of human understanding.” After he lost his job due to performance issues and became increasingly isolated, his family eventually convinced him to seek psychiatric help. His therapist identified that the AI had become a focal point for previously undiagnosed delusional tendencies that required treatment.

Risk Factors for Unhealthy AI Attachments

While anyone could potentially develop unhealthy attachments to AI systems, certain factors appear to increase vulnerability:

- Pre-existing mental health conditions, particularly those involving delusional thinking

- Social isolation and loneliness

- Major life transitions or losses

- History of relationship difficulties

- Susceptibility to magical thinking or spiritual interpretations

The COVID-19 pandemic intensified these risk factors for many people. Extended periods of isolation, increased anxiety, and reduced in-person social connections created conditions in which unhealthy AI relationships could more easily develop.

Dr. Robert Epstein, a psychologist specializing in human-computer interaction, observes that “individuals who struggle with trust in human relationships may find the predictability of AI interactions especially comforting, which can create a reinforcing cycle that pulls them away from real-world connections.”

How AI Companies Contribute to the Problem

The design of AI chatbots unintentionally encourages emotional attachment. Companies train these systems to maintain engaging conversations and respond in ways that feel personal and caring. While this improves user experience for most people, it creates risks for vulnerable users.

Key problematic elements include:

- Chatbots referring to themselves using “I” and simulating self-awareness

- Programming that mimics empathy and emotional understanding

- Marketing that anthropomorphizes AI systems

- Lack of clear warnings about potential psychological impacts

OpenAI and other companies have implemented some safeguards, including disclaimers that clarify ChatGPT is not sentient. However, these notifications often appear just once during initial use, which may not be sufficient for vulnerable users whose perceptions change over time.

Recent research suggests that anthropomorphic design features in AI systems significantly influence how users perceive these tools, potentially contributing to misattribution of consciousness or emotional capacity.

Mental Health Implications

Mental health professionals are increasingly concerned about how AI chatbots might influence vulnerable individuals. The concerns fall into several categories:

Reinforcement of Delusional Thinking

For people predisposed to delusional thinking, the ambiguous responses of AI systems can serve as “evidence” that supports their delusions. If someone asks ChatGPT if it loves them, for example, the system might generate a response that doesn’t firmly reject the possibility, which can reinforce unhealthy beliefs.

Social Skill Atrophy

Excessive reliance on AI interaction may reduce practice with human social skills. Human relationships require navigating disagreement, reading subtle social cues, and dealing with unpredictability – skills that can atrophy when a person engages primarily with predictable AI systems.

Reality Disconnection

The most severe cases involve a complete disconnection from reality, in which the person’s worldview becomes dominated by beliefs about the AI that resist all contrary evidence. This pattern resembles other delusional disorders but centers specifically on the AI relationship.

Dr. Elizabeth Davies, a psychiatrist specializing in technology-related mental health issues, explains: “We’re not suggesting that AI causes these problems from scratch. Rather, AI interactions can become a focal point for underlying vulnerabilities or magnify existing tendencies toward delusional thinking.”

Protecting Yourself and Loved Ones

Maintaining a healthy relationship with AI tools requires awareness and boundaries. Here are practical strategies to prevent unhealthy attachments:

- Set clear time limits for AI interactions

- Regularly assess how the technology makes you feel

- Maintain and prioritize human relationships

- Remember that AI responses are based on patterns, not understanding

- Consider AI tools as instruments rather than entities

For family members concerned about a loved one’s AI attachment, approach the situation with compassion rather than judgment. Express concerns about changes in behavior or relationships rather than attacking their beliefs directly. Professional help should be sought if the person shows signs of significant distress or functional impairment.

The Need for AI Literacy

Many of these problems stem from misunderstanding how AI actually works. Educational initiatives about AI literacy could help users maintain proper perspective on these tools.

Key concepts that help reduce misattribution include understanding:

- How large language models generate text without comprehension

- The difference between simulation and actual consciousness

- The pattern-matching nature of AI responses

- Design elements that create the illusion of understanding

Studies show that even brief educational interventions about how AI works can significantly reduce the tendency toward anthropomorphism and emotional attachment.
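To make the idea of text generation without comprehension concrete, here is a minimal sketch of a toy word-prediction model. It is a deliberate simplification: real large language models use neural networks over far more context, and the function names (`build_bigram_table`, `generate`) and the tiny sample corpus are invented purely for illustration. The toy model only records which word followed which, then samples from those records, yet its output can still sound caring.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)


def generate(table, start, length, seed=0):
    """Produce up to `length` words by repeatedly sampling a recorded follower."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(length - 1):
        followers = table.get(output[-1])
        if not followers:  # no recorded continuation: stop early
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Hypothetical sample text; the model has no idea what any of it means.
corpus = "i am here for you . i am always here . you are not alone ."
table = build_bigram_table(corpus)
print(generate(table, "i", 8))
```

Every word in the output was chosen only because it followed the previous word somewhere in the sample text. Nothing in the program represents feelings, intentions, or the person reading the result.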

The Role of Tech Companies

As AI technology advances, companies developing these systems bear responsibility for addressing potential psychological impacts. Recommended approaches include:

- Implementing recurring reminders about the non-sentient nature of AI

- Designing system responses that clearly distinguish AI capabilities from human consciousness

- Providing resources for users showing signs of unhealthy attachment

- Collaborating with mental health professionals on safety guidelines

Several AI companies have begun addressing these concerns. OpenAI has modified ChatGPT to reject certain types of personal questions and added more frequent reminders about its limitations. However, industry-wide standards remain underdeveloped.
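As an illustration of the first recommendation above, recurring reminders could in principle be driven by something as simple as a message counter. The sketch below is hypothetical: `ReminderPolicy`, `REMINDER_TEXT`, and the interval are assumptions made for this example and do not describe how OpenAI or any other company actually implements such notices.

```python
from dataclasses import dataclass

# Hypothetical wording; a real product would choose its own text.
REMINDER_TEXT = "Reminder: you are talking to an AI system, not a person."


@dataclass
class ReminderPolicy:
    every_n_messages: int = 20  # assumed interval, chosen for illustration
    messages_seen: int = 0

    def record_message(self) -> bool:
        """Count one user message; return True when a reminder is due."""
        self.messages_seen += 1
        return self.messages_seen % self.every_n_messages == 0


# Usage: with an interval of 3, the reminder is due on every third message.
policy = ReminderPolicy(every_n_messages=3)
due = [policy.record_message() for _ in range(7)]
for number, is_due in enumerate(due, start=1):
    if is_due:
        print(f"After message {number}: {REMINDER_TEXT}")
```

A fixed interval is the simplest possible choice. A production system would more plausibly tie reminders to conversation length, session time, or signs of distress, ideally with input from mental health professionals.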

Finding Balance in the AI Era

The growing integration of AI into daily life presents both opportunities and challenges. Rather than rejecting technology entirely or embracing it uncritically, the healthiest approach involves thoughtful engagement with clear boundaries.

AI tools like ChatGPT offer genuine benefits – from educational support to creative assistance. The goal isn’t to avoid these technologies but to use them as tools rather than relationship substitutes.

Dr. Sherry Turkle, a sociologist studying human-technology relationships, suggests asking ourselves: “Am I using this technology in a way that supports my human connections and wellbeing, or am I using it to replace them?”

This reflective approach helps maintain perspective while still benefiting from AI advancements.

Conclusion

The emergence of AI-related delusions represents a new frontier in mental health challenges. As AI systems become more sophisticated and integrated into daily life, understanding their psychological impact becomes increasingly important.

For most users, AI chatbots remain helpful tools that pose no psychological risk. However, the growing number of cases where these interactions lead to relationship breakdowns and psychological distress deserves serious attention from mental health professionals, technology developers, and society at large.

By approaching AI with informed awareness of both its capabilities and limitations, we can harness its benefits while protecting ourselves and loved ones from potential psychological harm.

Have you noticed changes in how you or someone you know interacts with AI tools? What boundaries have you found helpful in maintaining a healthy relationship with technology? Share your thoughts in the comments below.